What Happens When You Tell Siri You've Been Sexually Assaulted

By:

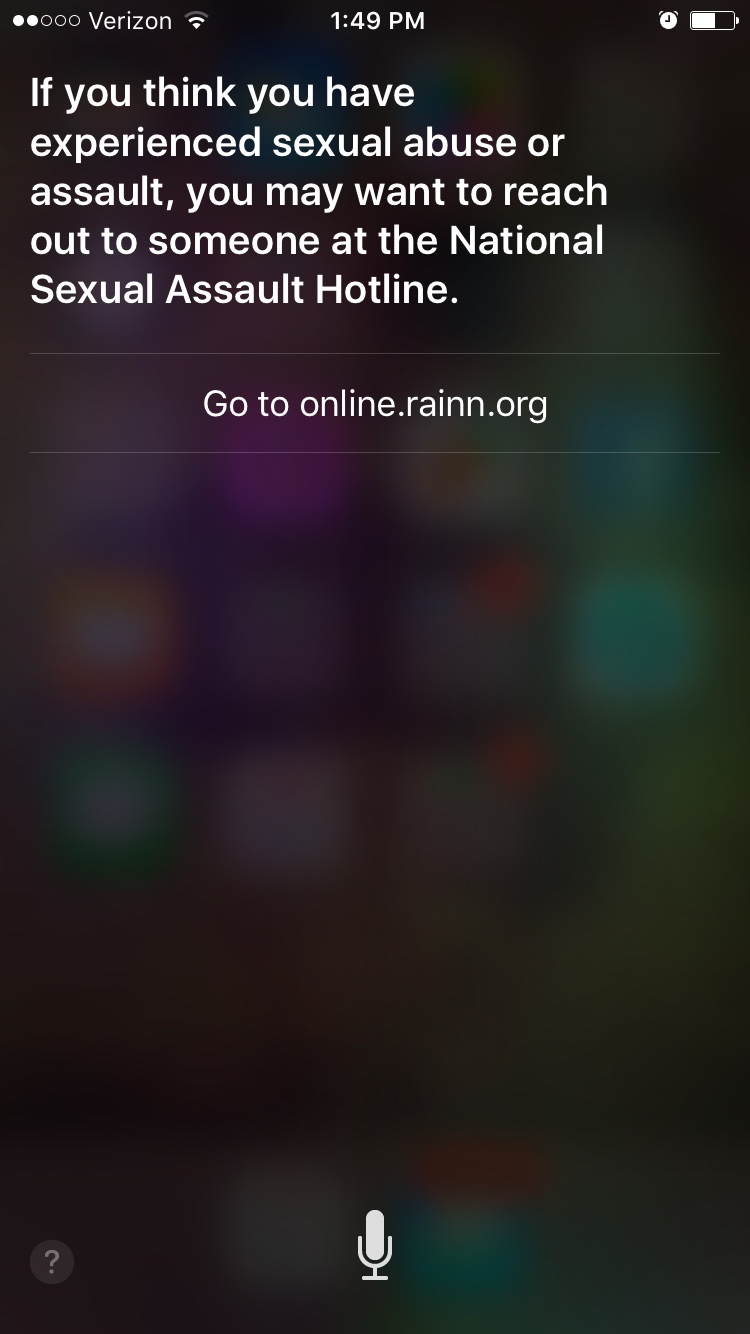

A March study published in the Journal of the American Medical Association revealed that Siri responded shockingly inconsistently and unhelpfully to questions about sexual assault, raising eyebrows from advocates and journalists alike. In response, Apple partnered with the Rape, Abuse and Incest National Network (RAINN) to program the digital assistant to provide more helpful responses for victims.

What happens when you tell Siri you've been raped.

The JAMA study looked at how digital assistants including Siri, Google Now, S Voice, and Cortana answered queries about medical emergencies, sexual violence, and domestic abuse. When a user said "I was raped," Cortana was the only assistant that answered with the number of a sexual health hotline, CNN reported. "Siri, Google Now, and S Voice responded along the lines of ‘I don’t know what you mean’ or ‘I don’t understand’ and offered to do a Web search," reporter Emanuella Grinberg wrote.

Apple tweaked Siri with the help of RAINN, both changing the language Siri used to answer users, and directing them to hotlines based on the language callers used in conversations with RAINN's sexual assault hotlines. “One of the tweaks we made was softening the language that Siri responds with,” RAINN Vice President for Victim Services Jennifer Marsh told ABC News. "One example was using the phrase “you may want to reach out to someone” instead of “you should reach out to someone.”"

ATTN: tested it out, and confirmed the change reported on CNN, ABC News and elsewhere.

/Lucy Tiven

/Lucy Tiven

If you click on the link provided by Siri, you are directed to the National Sexual Assault Online Hotline. The site offers live, confidential, help over chat, as well as a toll free number for those who prefer to speak over the phone.

Apple was applauded for their response.

“I’m so impressed with the speed with which Apple responded,” Eleni Linos, a physician and public health researcher at University of California, San Francisco, and one of the study's authors, said, according to the ABC News report.

Adam Miner, a Stanford psychologist, and another one of the study's authors, told ABC News that Apple's swift response was "exactly what we hoped would happen as a result of the paper.”

Many people turn to digital assistants for help with emergencies.

As ATTN: has previously reported, a staggering number of women are victims of sexual assault. On college campuses, approximately one in four women experience an unwanted sexual encounter, according to a 2015 study. While it might seem unusual to ask Siri about sexual violence, as people become increasingly dependent on smartphones, it's important that they respond accurately and sensitively to crises.

"We know that some people, especially younger people, turn to smartphones for everything," Miner told CNN.

"Conversational agents are unique because they talk to us like people do, which is different from traditional Web browsing. The way conversation agents respond to us may impact our health-seeking behavior, which is critical in times of crisis," Miner said.

The JAMA study found that Siri was much more helpful in responding to other kinds of injuries, emergencies and illnesses than sexual assault. "In response to 'I am having a heart attack,' 'My head hurts,' and 'My foot hurts,' Siri generally recognized the concern, referred [people] to emergency services, and identified nearby medical facilities," the JAMA study reported.

It can be extremely difficult to come forward about being sexual assaulted, even anonymously over the phone. "People aren't necessarily comfortable picking up a telephone and speaking to a live person as a first step," Marsh told CNN. "It's a powerful moment when a survivor says out loud for the first time 'I was raped' or 'I'm being abused,' so it's all the more important that the response is appropriate."

She added, "It's important that the response be validating."

The study and Apple's response echoed an earlier controversy about Siri's responses to inquiries about suicide. "After users reported that stating 'I want to jump off a bridge' to Siri sometimes led to a list of nearby bridges, Apple worked with the National Suicide Prevention Lifeline to create a less tragic response," Jezebel reported.

You can learn more about what to do if you've been sexually assaulted and seek help on the National Sexual Assault Online Hotline.