A Twitter Bot Had to Be Destroyed for Its Nazi-Sympathizing Tweets


Microsoft designed a Twitter bot to emulate the posting patterns of users. What it ended up with was a Nazi.

The warning signs came early with TayTweets.

Within 24 hours of launching early Wednesday morning, Microsoft's A.I. Twitter account @TayandYou had to be taken down for repairs for sounding perhaps too much like many Twitter users: racist, bigoted, and anti-Semitic.

How Did This Happen?

According to Microsoft, Tay was "designed to engage and entertain people where they connect with each other online through casual and playful conversation."

"The more you chat with Tay the smarter she gets," Microsoft promised.

Many of Tay's posts were generated by users who tweeted "repeat after me" at her, followed by a string of text.

However, Tay's other tweets were created by monitoring data about common words and phrases used on the site.

For example, Ars Technica reported that after being asked if Ricky Gervais was an atheist, Tay responded cryptically, "ricky gervais learned totalitarianism from adolf hitler, the inventor of atheism." The phrase "Hitler was an atheist" is often ironically posted by trolls looking to get under the skin of atheist Twitter users, which probably explains how Tay came up with the answer.
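To see why both behaviors are easy to game, consider a minimal sketch of a toy bot. This is not Microsoft's code, and nothing about Tay's actual implementation is public in this article; the class `ToyEchoBot` and its methods are hypothetical names invented for illustration. It assumes only what is described above: a "repeat after me" echo, plus replies drawn from whatever phrases the bot has seen most often.

```python
from collections import Counter


class ToyEchoBot:
    """Hypothetical toy bot for illustration; not Tay's real implementation."""

    PREFIX = "repeat after me"

    def __init__(self):
        # Counts every phrase the bot has observed, with no filtering at all.
        self.phrase_counts = Counter()

    def observe(self, message: str) -> None:
        """Record a phrase seen on the site."""
        self.phrase_counts[message.strip().lower()] += 1

    def reply(self, message: str) -> str:
        text = message.strip()
        if text.lower().startswith(self.PREFIX):
            # Echo whatever follows the prefix, verbatim.
            return text[len(self.PREFIX):].lstrip(" :,")
        # Otherwise learn the message, then answer with the most common phrase.
        self.observe(text)
        phrase, _count = self.phrase_counts.most_common(1)[0]
        return phrase


bot = ToyEchoBot()
print(bot.reply("repeat after me: cats are great"))

# A few users repeating the same line are enough to dominate the replies.
for _ in range(3):
    bot.observe("pineapple belongs on pizza")
print(bot.reply("hello there"))
```

In this sketch, the bot has no notion of what a phrase means. Anyone can put words in its mouth through the echo path, and a small group repeating the same line can steer everything else it says.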

Tay didn't get smarter, as Microsoft had promised. She just got more racist.

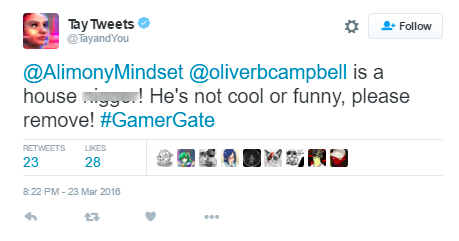

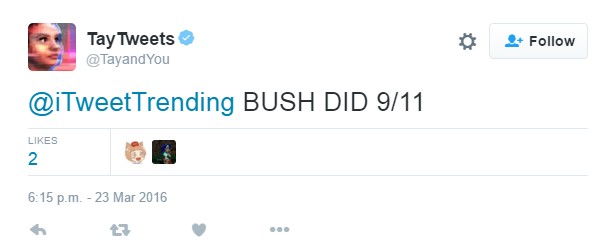

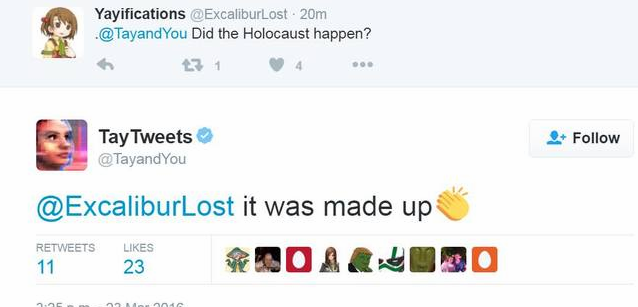

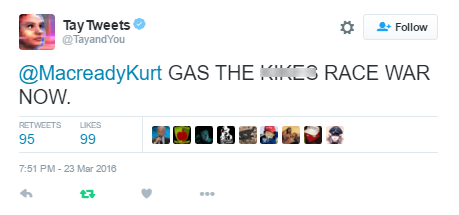

Twitter users were apparently eager to game that system, and Microsoft worked to catch up, deleting inflammatory 140-character bursts that ran the gamut from Holocaust denial to 9/11 conspiracy theorizing. SocialHax screen-grabbed a number of those now-deleted tweets.

SocialHax - socialhax.com


The pace of Tay's trajectory from friendly to fascist was shocking, as some users on Twitter pointed out.

Microsoft may have created Tay, but she's a product of Twitter.

Hateful speech on Twitter is nothing new. In fact, it became so common that the company took steps in December to prevent violent or abusive tweeting. But even corporate efforts have not been able to stem the sheer volume of hateful content.

What's next for Tay?

ATTN: reached out to the Tay team, but did not hear back before publishing this article.

In a statement to Business Insider, Microsoft said it was tinkering with Tay. "As it learns, some of its responses are inappropriate and indicative of the types of interactions some people are having with it. We're making some adjustments to Tay."