Facebook Announces Plan to Monitor Your Mental Health

On Tuesday, Facebook announced new suicide prevention tools for users of its platform around the world, the New York Times reported.

The feature consists of a drop-down menu that allows you to flag posts that hint that someone may be considering suicide or self-harm.

Reporter Mike Isaac explained how it works in the Times:

"Facebook’s new suicide prevention tools start with a drop-down menu that lets people report posts, a feature that was previously available only to some English-speaking users. People across the world can now flag a message as one that could raise concern about suicide or self-harm; those posts will then come to the attention of Facebook’s global community operations team, a group of hundreds of people around the world who monitor flagged posts 24 hours a day, seven days a week."

Facebook debuted the feature in some parts of the United States last year. The company will partner with mental health organizations around the globe to make support available to all users, according to TechCrunch.

Once a post is reported, a specially trained team of Facebook staff reviews it and determines whether its author is at risk of self-harm or suicide.

If Facebook concludes that a user is seriously considering self-harm or suicide, that user receives a pop-up message asking if they would like to talk to someone, contact a friend, be directed to a help line, or read mental health tips, Mashable reported.

The feature will also reportedly allow users to refer friends to mental health resources anonymously, and it will suggest how to word concerned messages.

What makes a Facebook post a serious mental health concern?

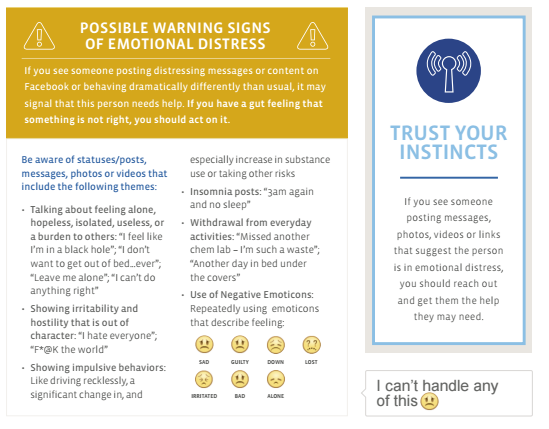

Facebook worked with two mental health organizations based in India, AASRA and the Live Love Laugh Foundation, to create a guide on how to spot and respond to "possible warning signs of emotional distress" on the platform.

"Help A Friend In Need"/Facebook - wordpress.com

But is it a violation of privacy?

“The company really has to walk a fine line here,” Dr. Jennifer Stuber, an associate professor at the University of Washington and the faculty director of the suicide prevention organization Forefront, told the New York Times. “They don’t want to be perceived as ‘Big Brother-ish,’ because people are not expecting Facebook to be monitoring their posts.”

Some Twitter users have already voiced these concerns.

Facebook has also struggled with privacy issues in the past.

"Facebook itself was forced to apologize in July 2014 for conducting psychological experiments on users," Catherine Shu pointed out on TechCrunch.

Shu further noted that other online suicide prevention tools that monitor users' social media posts have failed because of concerns about privacy and exploitation.

"In fall 2014, United Kingdom charity Samaritans suspended its suicide prevention app, which let users monitor their friends’ Twitter feeds for signs of depression, just one week after its launch, following concerns about privacy and its potential misuse by online bullies," Shu wrote.

ATTN: reached out to Facebook about how the company plans to protect user privacy and will update this post when we receive a response.

[h/t The New York Times]