How to Fight Racism Online


In the aftermath of Donald Trump's election as president, people are wondering how to have productive discourse with those they disagree with. The uptick in hate crimes and harassment since Trump's election is its own issue. Meanwhile, a new study has tapped into Twitter's infamous racism problem to come up with a strategy for fighting racism online.

In 2015, a study found that 88 percent of all abusive social media posts occurred on Twitter. It's no wonder, then, that the network proved an ideal place to run an experiment on curbing racist harassment online.

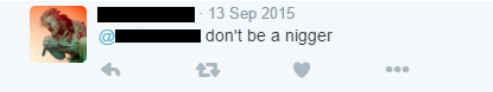

As detailed in the paper "Tweetment Effects on the Tweeted" (an intentional play on the word "treatment"), published in the journal Political Behavior, Ken Munger, a politics PhD student at New York University, scraped Twitter to find users who appeared to be white men and who had tweeted the word "n**ger" at another user. He then narrowed this pool to accounts that were "regularly offensive," judged by how often they used other offensive terms.

Ken Munger - springer.com


Munger wrote: "Because they are the largest and most politically salient demographic engaging in racist online harassment of blacks, I only included subjects who were white men." Next, Munger created bots whose identities varied along the following dimensions: number of followers ("low-follower" meant zero to 10 followers, and "high-follower" meant between 400 and 550 followers), the skin color of the cartoon avatar, the username, and the full name. Munger noted that it was important for subjects not to realize they were interacting with a bot, and this did not prove to be a problem.

Here is the message the bots sent to the racist users in the sample: "Hey man, just remember that there are real people who are hurt when you harass them with that kind of language."

Ken Munger - springer.com


So which bots were the most effective? According to Munger, bots that appeared white and had a high number of followers were the only group that significantly reduced the subjects' use of racist language. The paper states that the 50 subjects who were contacted by this category of bot tweeted the word "n**ger" an "estimated 186 fewer times in the month after treatment."

As Munger told Science of Us:

"[M]y work is trying to test ways of priming people’s offline identities and thus reminding them that what they’re doing (using extreme language to make people feel bad) violates norms of behavior except in the online communities to which they belong."

In his paper, Munger says he wants to extend this approach to reducing misogynistic harassment, another widespread problem online, and to discouraging people from spreading false and potentially dangerous information about topics such as vaccination.

[h/t Science of Us]